HPCC leverages in-network telemetry (INT) to control traffic precisely.

Introduction

HPCC:

- adapts quickly

- keeps queue empty to maintain extremely low latency

- less parameters to adjust fairness & efficiency

motivation

the large RDMA deployments

Clos topology with three layers: ToR (top of rack, edge), Agg, Core.

Each PoD (point of delivery) is an independent RDMA domain. Diff PoD are interconnected by Core switches.

DCQCN, PFC, go-back-N is adopted.

target

- fast converge

- close-to-empty queue

- few parameters

- fairness

- easy to deployment

some considerations:

- Compared to TCP/IP, RDMA is at higher risk, since they start at line rate.

- PFC has destructive impact. 1. storms 2. deadlocks 3. suppress a large number of innocent senders

- DQCQN has a large number of parameters need to tune carefully, and there exists trade-offs like throughput vs stability; bandwidth vs latency and more.

Hopefully, INT has been enabled on many new switches, and programmable NIC is being capable.

design

INT supports some meta-data of each switches: ts, qlen, txBytes, B(link bandwidth).

HPCC is based on inflight bytes, which means the cwnd remains below BDP. In this situation, the queue remains empty.

The start speed is $W_{init}=B_{NIC}\times T$, where $B_{NIC}$is the NIC bandwidth. The sending rate is paced to avoid burst. The pacing rate is $R=W/T$, T is the base RTT. Note that in DCN, the base RTT is similar.

HPCC uses INT information to adjust the rate. MIMD+AI to converge quickly and keep fair.

For a link j, we have $I_j = \sum W_i$, where $W_i$ is the i-th flow’s window. Thus we need to keep $I_j \leq \eta \times B\times T$, where $\eta$ denotes the utilization of the link, usually 95%.

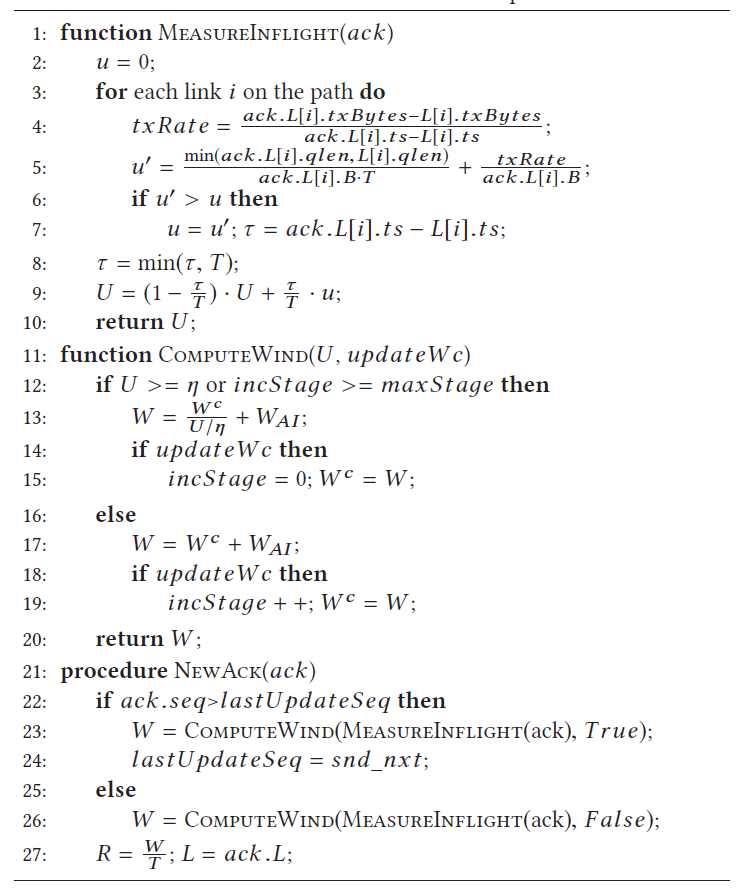

We can estimate the link usage by INT information. $I_j = qlen + txRate \times T$, and $txRate = \frac{ack_1.txBytes-ack_0.txBytes}{ack_1.ts-ack_0.ts}$. This emulation assumes the RTTs of all flows are the same.

In this way, we can find the bottleneck link. Each sender multiplicatively reduce its window by a factor of $k_j=I_j/\eta \times B\times T=U_j/\eta$, where $U_J$ is the normalized inflight bytes of link j:

Sender i react as:

$W_{AI}$ is the AI part, which is very small, $W_{AI} = W_{init} \times (1-\eta)/N$, where N is the expected maximum concurrent flows on a link.

To prevent overreaction is a single RTT, HPCC uses a reference window size $W_i^c$, updated each RTT.

As shown in the algorithm, only three parameters to set. $\eta, maxStage, W_{AI}$. $\eta$ controls the tradeoff between utilization and transient queue length. maxStage controls the tradeoff of steady state stability and the speed to reclaim free bandwidth, usually 5.$W_{AI}$ controls the tradeoff between the maximum number of concurrent flows on a link to keep near-zero queues and the speed of convergence to fairness.